Introduction

Quantum Computing (QC) is a computational paradigm that exploits Quantum Mechanics (QM) to encode information and perform calculations. The unit of information, the qubit, is physically mapped onto a quantum quantity such as electron spin, rather than a classical one such as voltage, and a measurement always returns one of the basis states of the host system, e.g. one of the spin’s orientations. Superposition and entanglement are the fundamental QM principles that give quantum computers their characteristic features, which can make them more computationally efficient than classical computers for some hard problems in optimization, search and simulation.

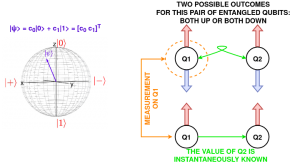

Superposition means that, before any measurement, a quantum state is generally represented by a combination of two or more basis states, each with a potentially non-zero probability of being measured; a qubit is thus described by a superposition of the two states encoding 0 and 1. For computing, this implies that on an N-qubit computer the same operation can act simultaneously on 2^N input values, each associated with a basis state, although a measurement returns only one of them. For this reason, interference mechanisms are exploited alongside superposition to increase the probability of measuring the problem’s solution.
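The 2^N scaling described above can be illustrated with a minimal state-vector sketch in plain Python. It is only an illustration, not the simulator used at the VLSI Lab: the function names and the bit-ordering convention (qubit 0 as the least significant bit) are assumptions made here.

```python
import math

def apply_hadamard(state, target, n_qubits):
    """Apply a Hadamard gate to qubit `target` of an n-qubit state vector."""
    h = 1.0 / math.sqrt(2.0)
    new_state = list(state)
    stride = 1 << target  # assumed convention: qubit 0 is the least significant bit
    for i in range(1 << n_qubits):
        if i & stride == 0:
            a, b = state[i], state[i | stride]
            new_state[i] = h * (a + b)
            new_state[i | stride] = h * (a - b)
    return new_state

def uniform_superposition(n_qubits):
    """Start from |00...0> and apply a Hadamard gate to every qubit."""
    state = [0.0] * (1 << n_qubits)
    state[0] = 1.0
    for q in range(n_qubits):
        state = apply_hadamard(state, q, n_qubits)
    return state

n = 3
state = uniform_superposition(n)
# One amplitude per basis state: 2^N of them for N qubits.
probabilities = [abs(a) ** 2 for a in state]
print(len(state), probabilities)
```

With N = 3 the list holds 8 amplitudes, all with the same measurement probability. The classical memory needed to store them doubles with every added qubit, which is one reason classical simulation is limited to small systems.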

Entanglement is a property of quantum systems made of several sub-systems (e.g. several qubits), which can exhibit an intrinsic correlation that makes it impossible to describe them individually. In the Quantum Information domain, entanglement implies that the measurement result of one qubit is correlated with the results obtained on the qubits entangled with it, and this property can be exploited to reduce the number of operations required to reach a solution.
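As a small worked example of this correlation, the sketch below builds a two-qubit Bell state by hand and checks two things: that it cannot be written as a product of single-qubit states, and that the outcome of one qubit fixes the outcome of the other. The helper names and the amplitude ordering are assumptions made for illustration.

```python
import math

def bell_state():
    """Return (|00> + |11>)/sqrt(2); amplitudes are ordered as q1 q0 = 00, 01, 10, 11."""
    h = 1.0 / math.sqrt(2.0)
    # Hadamard on q0 of |00> gives (|00> + |01>)/sqrt(2).
    state = [h, h, 0.0, 0.0]
    # CNOT (control q0, target q1) swaps the amplitudes of |01> and |11>.
    state[1], state[3] = state[3], state[1]
    return state

def is_product_state(state, tol=1e-12):
    """A two-qubit pure state is separable iff a00*a11 - a01*a10 = 0."""
    a00, a01, a10, a11 = state
    return abs(a00 * a11 - a01 * a10) < tol

def prob_q1_given_q0(state, q1_value, q0_value):
    """Probability of measuring q1_value on q1, given that q0 returned q0_value."""
    probs = [abs(a) ** 2 for a in state]
    joint = probs[2 * q1_value + q0_value]
    marginal = probs[q0_value] + probs[2 + q0_value]
    return joint / marginal

bell = bell_state()
print(is_product_state(bell))        # entangled, so not a product state
print(prob_q1_given_q0(bell, 1, 1))  # q1 always agrees with q0
```

Each qubit taken alone gives 0 or 1 with equal probability, yet the two results always coincide. This is the correlation that quantum algorithms exploit.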

Some problems are already known to be solvable more efficiently on a quantum computer than on a classical one, such as integer factoring with Shor’s algorithm (polynomial cost, whereas the best known classical algorithms are super-polynomial) and unstructured database search with Grover’s algorithm (cost proportional to the square root of the number of elements in the dataset instead of linear in it). Moreover, quantum computers with some tens of qubits have already been fabricated with different technologies, such as solid-state, atomic and molecular. Even though the mechanisms above and simple quantum algorithms have been experimentally demonstrated, current hardware shows intrinsic limits due to its interaction with the external environment, which can cancel the benefits obtained from the QM principles. Dynamic effects such as relaxation and decoherence gradually increase computational errors and consequently reduce the probability of measuring the state associated with the problem’s solution.
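To make the square-root scaling of Grover’s algorithm concrete, the following sketch compares query counts for a single marked item. It uses the standard approximation of about (π/4)·√N Grover iterations and the average cost of a classical linear scan; the function names are illustrative.

```python
import math

def grover_iterations(n_items):
    """Approximate optimal number of Grover iterations for one marked item."""
    return math.floor(math.pi / 4.0 * math.sqrt(n_items))

def classical_expected_queries(n_items):
    """Average number of lookups of a linear scan for one marked item."""
    return (n_items + 1) / 2.0

for n_items in (100, 10_000, 1_000_000):
    print(n_items, grover_iterations(n_items), classical_expected_queries(n_items))
```

For one million elements the quantum search needs on the order of hundreds of oracle calls, against hundreds of thousands of classical lookups on average. The comparison counts queries only and ignores gate errors and the cost of implementing the oracle.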

Engineering QC technologies is necessary for fabricating many-qubit, fault-tolerant quantum computers.

Methodology

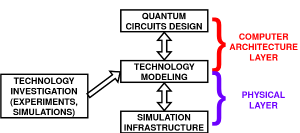

QC technologies can differ significantly in terms of operating parameters (temperature, static magnetic fields), bandwidth of the EM signals employed to implement quantum gates, timescales of non-ideality phenomena, native gates, and fabrication and maintenance costs. At the VLSI Lab they are analyzed with a methodology derived from the one employed for beyond-CMOS classical technologies, where each technology is evaluated on its own and compared with the others on multiple levels.

Multi-level analysis, where devices and circuits are not treated separately, is required to ensure effective design of QC technologies and architectures. On the one hand, the limitations of a new device can be effectively defined only in concrete use cases with complex circuits; on the other, concrete circuit design depends on the strengths and limitations of a technology. This combined approach improves the quality of design at both levels and can lead to the discovery of new quantum technologies and computer architectures.

Simulation Infrastructure

The methodology is concretely implemented in a classical simulation infrastructure for comparing QC technologies, based on three levels. The starting point is a common simulation core, which evaluates the evolution of each quantum state under the electromagnetic fields that implement the gates and under common non-idealities such as relaxation and decoherence. On top of it, a specific simplified model of each technology is developed and interfaced with the lower level; it takes into account the main, and possibly exclusive, features of each system and their dependence on external parameters such as static magnetic fields and temperature, derived from experiments or physical (e.g. ab-initio) simulations. To simplify quantum circuit design for users, the infrastructure currently provides an interface to the quantum Hardware Description Language OpenQASM.
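As an illustration of the kind of non-ideality such a common core has to track, the sketch below applies a textbook phenomenological model to a single-qubit density matrix: the excited-state population decays with the relaxation time T1 and the coherences decay with the decoherence time T2. This is an assumed simplified model (rotating frame, relaxation towards the ground state), not the model implemented in the infrastructure, and the T1 and T2 values are arbitrary placeholders.

```python
import math

def decay(rho, t, t1, t2):
    """Evolve a 2x2 density matrix for a time t under relaxation (T1) and dephasing (T2)."""
    p1 = rho[1][1].real * math.exp(-t / t1)   # excited-state population
    coh = rho[0][1] * math.exp(-t / t2)       # off-diagonal coherence
    return [[1.0 - p1, coh],
            [coh.conjugate(), p1]]

# |+> = (|0> + |1>)/sqrt(2): equal populations and maximal coherence.
rho_plus = [[0.5 + 0j, 0.5 + 0j],
            [0.5 + 0j, 0.5 + 0j]]

T1 = 100e-6  # placeholder relaxation time, in seconds
T2 = 50e-6   # placeholder decoherence time, in seconds

for t in (0.0, 25e-6, 50e-6, 200e-6):
    rho_t = decay(rho_plus, t, T1, T2)
    print(t, rho_t[1][1], abs(rho_t[0][1]))
```

The printed coherence shrinks as the idle time grows, so a gate sequence lasting a sizeable fraction of T2 loses the superposition it relies on. This is why gate durations and decoherence timescales have to be compared for each technology.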

In the future, this infrastructure will be interfaced with Quantum Computing frameworks such as Qiskit and Cirq.

Analyzed technologies

The current features of each quantum technology (timescales of relaxation and decoherence, qubit connectivity, etc.) do not yet make it possible to determine which one will lead the fabrication of quantum computers. For this reason, no quantum technology can be excluded a priori. Quantum technologies can be roughly divided into three categories: molecular, atomic and solid-state. Each category can encode quantum information onto different physical quantities, such as electron spin, photon polarization and quantized current. The research carried out at the VLSI Lab aims to analyze all these technologies, with the intention of integrating them into the simulation infrastructure.

Molecules

Molecular quantum computers encode information onto the spins of nuclei or electrons, which are manipulated through magnetic resonance techniques.

At the end of the 1990s, Nuclear Magnetic Resonance (NMR) molecules constituted the first physical apparatus for experimentally demonstrating quantum algorithms: encoding quantum information on nuclear spins ensured decoherence timescales in the order of seconds and made it possible to implement a universal set of quantum gates by exploiting the direct interaction between spin qubits. However, they suffered from poor qubit scalability and long multi-qubit gate durations.

To overcome the limitations of traditional NMR, molecular nanomagnets have been chemically engineered for QC since the beginning of the 2010s, with the aim of ensuring long coherence timescales and scalability through supramolecular techniques. In these devices a qubit is usually encoded onto the global spin of a multi-atom system, and multi-qubit gates can be implemented either with a direct spin-spin coupling, as in NMR, or with the assistance of intermediate spins called switches. The latter provide an indirect coupling between qubits, which permits faster multi-qubit gates.

Both NMR-like and switch-based molecular technologies have been analyzed at the VLSI Lab. It is important to note that there is no dichotomy between an old technology (NMR) and a new one (switch): as written above, molecular nanomagnets can belong to both sets. The main differences between them concern the type of coupling (direct or indirect), which influences the duration of the quantum gates, and the connectivity of the quantum architecture (theoretically full in NMR; dependent on the location of the spin switches, and currently linear, in the other case). These differences also affect circuit design: the compilations of the same quantum circuit on these devices can differ significantly, and it is important to compare them in order to identify the better technological approach for solving a given problem.
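The effect of connectivity on compilation can be sketched by counting the extra SWAP gates needed to run the same list of two-qubit gates on a fully connected device and on a linear chain. The routing strategy below is deliberately naive and hypothetical: each gate is routed independently and the qubits are moved back afterwards. A real compiler would do better, and the function names are assumptions made here.

```python
def swaps_to_adjacent(control, target):
    """SWAPs needed to bring two qubits next to each other on a linear chain."""
    return max(abs(control - target) - 1, 0)

def routing_overhead(gates, connectivity):
    """Total extra SWAPs for a list of (control, target) two-qubit gates."""
    if connectivity == "full":
        return 0  # every pair of qubits can interact directly
    if connectivity == "linear":
        # Naive routing: move the qubits together, apply the gate, move them back.
        return sum(2 * swaps_to_adjacent(c, t) for c, t in gates)
    raise ValueError(f"unknown connectivity: {connectivity}")

circuit = [(0, 1), (0, 3), (1, 4)]
print(routing_overhead(circuit, "full"))    # 0
print(routing_overhead(circuit, "linear"))  # 8
```

The SWAP count is only one side of the comparison. The same circuit has to be weighed against the duration of each native multi-qubit gate, since a linear architecture with faster gates may still complete the circuit within the coherence time.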